(Guest post by Greg Frazer)

Background

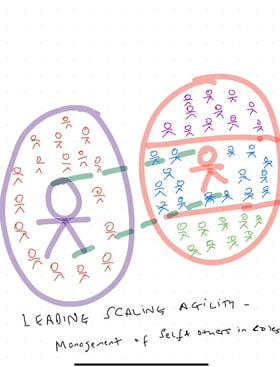

We had a polished crew of 15 Agile XPers who had been honing their skills within the methodology for an average of 5 years. We were working for GlaxoSmithKline (GSK), the second largest pharmaceutical company in the world at 100,000 employees. Dedicated to R&D IT, our primary customers were Discovery Research & Development (Early Stage) scientists who were busy building the GSK pipeline into one of the best portfolios of drug candidates in the industry. We were proud of our Agile XP results in this challenging environment where the business, science, and technology were all evolving and changing in parallel. Our flexible approach, monthly prioritization, fast feedback loops and solid customer involvement gave our applications 30-40% higher user survey scores than our non-Agile project counterparts. Similarly, our use of TDD and active customer involvement dropped our defect rates by an order of magnitude. We also won awards based on new user growth for our applications.

Further, many of these successes were attained using distributed and/or offshore teams. Offshore teams frequently required extending iterations to two weeks, building extra communication channels, and being fanatical about encouraging open communication and actions during retrospectives. See Figure 1 for many of our Agile metrics.

Impetus for Change

With the many successes, our approach was viewed favorably overall. The team, however, was not able to get over the feeling of being stuck and occasionally bored. There were a number of things that plagued us, and we realized we lacked the strategic vision and learning to guide us further down the path.

There were process problems that seemed to either cascade or round robin. We would apply retrospective actions to our build process, for example, and then later have a resulting problem show up in testing and developers version. The recommended improvement actions seemed reasonable at the time, but were often accepted without an overall strategy or impact awareness.

We had a chronic problem related to user stories that would spill over into the next iteration (usually those with large ‘estimate vs actual’ differentials). This was despite mature story breakdown rigor and accurate velocity estimations. Besides needing to inform the UAT testers and customer sponsors of the slight change to iteration delivery, we also had the distasteful work of removing it from the release candidate code and adding it back later (as it was difficult to predict release branch places ahead of time).

While coaching many other teams to maturity around the benefits of the Agile methodology, I found it difficult to quantify the maturity of a team. Our own team was pondering questions like ‘How fast/professional are we compared to the most mature Agile teams?’. Through online blogs and conferences, I knew our team had plenty of mentors out there that had processes more mature and risk tolerant than ours. Encouraged never to compare velocities, and without other mechanisms, the most common method seemed to be the subjective rating of 'How many of the Agile practices were you doing?' (and perhaps how well you were doing them). What was absent was a vision, path, or metric that could feed the team’s thirst for improvement.

Another major frustration that I struggled with was the impact our departmental paradigms around constructing projects and in-line ‘resource’ changes had on our productivity. Our velocity was often down 40% during the project start-up, which commonly lasted two months. Towards the end of the project, we were typically working on medium to low priority work. While not explicit, it had become fairly common practice to overlap resources on projects in attempts to scrape the last parts of efficiency from our people. Because of rampant resource splitting, it was not uncommon for several resources to be covering two or three projects simultaneously. From our resource managers, I was regularly fed the question ‘What impact would removing/adding resources have on the project?’. I grew tired of relying on speculative velocity information in response, because we really had very little idea of what change would result from losing some of the person’s primary skills. Nor did anyone often know how many efforts each person was supporting simultaneously.

The lack of scalability of the Agile methodology also seemed to limit the progression of our success. We had success on our project teams, but were still limited by a huge portfolio item inventory for each product (much of it stagnated), low-value project creation/tear-down processes, and had not had any success expanding the methodology into the business.

A New Approach to Software

In late 2007, we started loosely tracking some interesting work spawned by David Anderson and Corey Ladas on applying Lean methods to software development. After attending Agile 2008 (Toronto) and soaking up all the presentations surrounding Lean, Kanban, Continuous Flow, and Micro Releases (Joshua Kerievsky’s talk), we saw this alternative approach as viable enough to devote learning and experiment money towards.

What pleasantly surprised me from the start was the simplicity of Lead Time and Cycle Time as concepts. Not only are they easily measured, but they are applicable at vastly different scale levels. The first scale I applied them to was our rigid notion of projects. GSK had long ago standardized on traditional PMBOK gating practices, so it was easy to gather project conception and our six gate milestone data. I loosely defined active work (Cycle Time) as being between gates 2-4, as we didn't have funding or development resources until Gate 2, and Gate 4 represented the final release of the product. These gates bounded the time period in which requirements received final definition, code was created, testing was completed, and deployment was executed (both for Agile and non-Agile projects). As is evident in the Box Plot diagram, the average Lead Times (gates 1-6) were twice the average Cycle Times across all of the project tiers (loosely defined as Tier 1: >£5M, Tier 2: £1M-£5M, Tier 3: £50K-£1M, Tier 4: <£50K). The only tier for which this was not true was Tier 4, where the projects averaged only around three months of Lead Time.

The Agile project’s feature stories were the second scale to which I applied Lead and Cycle Time metrics. The average Lead Time (conception to delivery) was three times the Cycle Time (commitment to delivery). This meant that on average our enhancement opportunities were spending two thirds of their existence essentially waiting in queue. There are also two things that make this even more spectacular. First, the only features I included in this metric were the ones that were ultimately chosen for commitment. It did not include those that were created and then deleted, or those that were still sitting awaiting priority (and may continue to do so for months). Second, usually the more important the product, the more features (inventory) were built up. The more features it had built up, the worse the average response time for the customers.
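As a rough illustration of how these two metrics relate, the sketch below computes Lead Time, Cycle Time, and the share of Lead Time spent waiting in queue from three dates per feature. The `Feature` record, its field names, and the example dates are hypothetical and chosen only to mirror the three-to-one ratio described above; they are not data or code from the team's tool.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Feature:
    # Hypothetical record; the field names are illustrative assumptions.
    conceived: date   # feature idea first recorded
    committed: date   # team commits to building it
    delivered: date   # released to customers


def lead_time_days(f: Feature) -> int:
    """Conception to delivery."""
    return (f.delivered - f.conceived).days


def cycle_time_days(f: Feature) -> int:
    """Commitment to delivery."""
    return (f.delivered - f.committed).days


def queue_fraction(features: list[Feature]) -> float:
    """Share of total lead time spent waiting before commitment."""
    total_lead = sum(lead_time_days(f) for f in features)
    total_cycle = sum(cycle_time_days(f) for f in features)
    return (total_lead - total_cycle) / total_lead


# Made-up example: 90 days of lead time, 30 of them in active work.
example = Feature(date(2008, 1, 1), date(2008, 3, 1), date(2008, 3, 31))
print(lead_time_days(example), cycle_time_days(example))
print(round(queue_fraction([example]), 2))
```

Note that, as in the text, only features that reached delivery can be measured this way, so anything deleted or still waiting in the backlog is invisible to the ratio.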

How did we get started?

With a distributed program of teams, utilizing an electronic kanban tool for collaboration and automated metric recording was an easy choice. Immediately we noticed the lack of strong tool support that offered the visible cues and fostered improvement according to the principles of Lean (at least in early 2008). We had also been looking for an excuse to put Flex on top of RoR, so we decided to build our own collaboration tool to satisfy both 'pilots'. Jeff Canna and I co-developed our Online Kanban tool during the fourth quarter of 2008 (with enhancements continuing well into the first two quarters of 2009).

In parallel, we were constantly discussing the principles of Lean, and how they would have helped us through some difficult stages in previous years if we had incorporated them into our arsenal of approaches.

Early on, we had our first epiphany. Here we were, a professional software development team, trying to simply form our existing work process into a simple set of steps (for columns on our visual kanban board). Surely we would be able to construct the board as the result of a quick half-day discussion. It actually took us the better part of a week with significant dedicated discussions. There was also a rapid set of evolutionary changes spread over the first two months. To some extent, this just highlights that software development is a non-trivial task. However, we were not looking to perfectly replicate the model, just form up the basic common steps. Our difficulty with consensus highlighted how far we were from understanding the flux and instability of our current process. Frankly, it was a humbling experience, but simultaneously a great wake-up call that hinted at the magnitude of the improvement opportunity.

Multiple Products, Multiple Teams, Combined Process

Dedicated to driving the Compound Screening R&D services at GSK, our full program covered two dozen applications. Often only a small handful (5 or 6) were in active development. After three different board setups and network models, the design we found most true to our vision for this first year included three categories. The Delivery Category was a one-to-one mapping between a product and a dedicated release kanban board. These boards tended to include all steps necessary to provide any final documentation (regulatory or otherwise), release communication, deployment mechanisms, and post-deployment feedback. The Opportunity Category was similar to the Delivery Category in that it once again mapped products directly to kanban boards. These process boards pulled strong feature concepts through to focused, detailed MMFs once open slots became available. The Development Category represented a direct mapping between a kanban board and a dedicated execution team (BA, Devs, Testers, Support, etc.). This was the most watched and active set of boards, and it tracked the building of features. I found that intentionally detaching the development boards from products helped in a number of ways. It meant that we did not introduce policy constraints tying a team to a single product, nor did we limit our ability to work on more products than we had teams. It also acted as a reminder to Product Owners and BAs that there was constant competition for our capacity constrained resources (development teams) and that any MMFs should be well thought out and properly prepared prior to selection. Serving multiple products from each board also gave us the opportunity to improve our process across products, rather than customizing it too much for one product. This was a recognized trade-off, and one that we enthusiastically enjoy discussing.

Learning to Pull (at least our version)

Pulling the work through the process took the team some time to grasp, likely because it reversed the flow of everything we had known previously. Even though everyone understood the concept of waiting until there was an open slot in the process downstream, for the first two months we had stacks of inventory piling up in multiple places (Ready for BA testing, Ready for development, Ready for Production). Our kanban board was visually screaming the message that we were a team of individuals with pipe-lined roles still pushing the river of opportunities downstream. We commonly held Work-In-Progress (WIP) of ~100 stories for a team of 15. Our first growth step came when the team switched its column focus from left-to-right to right-to-left: they simply started by looking for something to do with an imminent release, and progressed backward through UAT, testing, development, and design until they found something obvious to work on. Somewhat surprisingly, this was a larger paradigm shift for the BAs than the developers (and old hat for Support). The second pull growth step came soon afterward with realizing that card flow is much more important than any historical roles. Developers helped with functional testing, Scrum Masters helped with story breakdown and acceptance criteria definition, and everyone helped with a fast, quality deployment. These two steps improved flow, dropped WIP, and increased the desire to release more often than our deployment process supported at the time (several times a week). They also set up our third key growth step in pulling: customers pulling through a release. A customer group would meet with our BA(s) and Support Product Owners to identify/construct a primary and secondary micro release for a product or business functional area (across products). 
Once identified, they would tag the user stories with the release name (also linked to a code repository version) and set up the collaboration tool to filter cards based on those release tags. Instantly, everyone in the entire value stream could easily absorb which releases were pending and what cards (defects, MMFs, small enhancements, etc.) needed to be pulled through the board to complete the release for the customers. Fortunately, because of our customers' experiences with Agile, we had them used to working in smaller batches. They seemed instinctively delighted with being able to 'construct' their own releases.
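To make the right-to-left scan and the release-tag filter concrete, here is a minimal sketch. The column names, the `Card` fields, the `next_card` helper, and the card titles are all hypothetical; the real boards had more columns and the tool's actual data model is not shown in this report.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical column order, left (upstream) to right (downstream).
COLUMNS = ["Design", "Development", "Testing", "UAT", "Release"]


@dataclass
class Card:
    title: str
    column: str
    release: Optional[str] = None  # release tag, if the card has one
    in_hand: bool = False          # True when someone is already working on it


def next_card(cards, release=None):
    """Scan the board right to left; return the first card nobody is working on.

    If a release tag is given, only cards carrying that tag are considered.
    """
    for column in reversed(COLUMNS):
        for card in cards:
            if card.column != column or card.in_hand:
                continue
            if release is not None and card.release != release:
                continue
            return card
    return None


# Made-up board contents for illustration.
board = [
    Card("Plate search filter", "Development", release="R1"),
    Card("Fix export defect", "UAT", release="R2"),
    Card("Audit report", "Testing", release="R1"),
    Card("Deployment notes", "Release", release="R2", in_hand=True),
]

print(next_card(board).title)
print(next_card(board, release="R1").title)
```

Starting from the rightmost column means the card closest to delivery always wins, which is the behavior that stops upstream inventory from piling up.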

Metrics and Visual Cues

I include metrics and visual cues topics together, as most of the metrics we gather are soon reflected as visual cues (or so we came to understand). Early learning suggested that if the metric is useful for one person, it is often useful for the entire team, especially if it makes our flow (or blockage) more visible or the reasons for our cycle time more evident. At the outset of our endeavor, we realized that queue limits on a kanban board were crucial differentiators (to the common task board, for instance) in support of the Lean principles. Our experience was that they were fairly easy to set and great for not wasting effort, particularly upstream. I also had the sense to understand that eventually we would want to gather timing details, so I set up our collaboration tool to record simple history timestamps on a card entering and leaving a column. Many of the metrics that started as background curiosity would eventually become relied upon as part of the visual fabric of our boards. Three levels of metrics dealing with flow complemented each other well and were crucial additions to the collaboration tool. Cycle time was initially constructed as a report run behind the scenes, but pushing this to the top of every individual kanban board allowed everyone to view the overall system’s progression without effort. We used the natural evolution to greater granularity to also display average time in column for cards. We found this metric helped identify the largest kaizen improvement opportunities across the system. Not only could we instantly identify the few ‘steps’ taking the longest proportion of time, but it also combined well with the overall Cycle Time to ensure the kaizen effort didn’t create extra time elsewhere in the system. The final level shows how long a card has been in its current column. The signal on the card turns red when the card has been in that column twice the average time for that column (comparing directly to the previous metric). 
Besides queue limits, this was another valuable leading indicator that proactively highlights potentially blocked or difficult cards that might benefit from swarming or external collaborator attention. It is also used as a partial priority attribute, meaning when most other elements are equal for cards, choose the one with the longer time in column.
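The average-time-in-column metric and the red signal can both be derived from the enter/leave timestamps alone. The sketch below assumes a hypothetical history format of `(card_id, column, entered, left)` tuples, with `left` set to `None` while a card is still in the column; the function names and sample rows are illustrative, not taken from the tool.

```python
from collections import defaultdict
from datetime import datetime

SECONDS_PER_DAY = 86400


def average_days_in_column(history):
    """Average completed stay per column, in days."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for _card, column, entered, left in history:
        if left is None:
            continue  # only completed visits feed the average
        totals[column] += (left - entered).total_seconds() / SECONDS_PER_DAY
        counts[column] += 1
    return {column: totals[column] / counts[column] for column in totals}


def is_red(entered, now, column_average, factor=2.0):
    """True once a card has sat in its column for `factor` times the average."""
    age_days = (now - entered).total_seconds() / SECONDS_PER_DAY
    return age_days >= factor * column_average


# Made-up history: two completed visits to Testing, one card still there.
history = [
    ("A", "Testing", datetime(2009, 3, 2), datetime(2009, 3, 4)),
    ("B", "Testing", datetime(2009, 3, 3), datetime(2009, 3, 7)),
    ("C", "Testing", datetime(2009, 3, 10), None),
]

averages = average_days_in_column(history)
print(averages)
print(is_red(datetime(2009, 3, 10), datetime(2009, 3, 17), averages["Testing"]))
```

Sorting otherwise equal cards by descending time in column gives the partial priority rule mentioned above.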

There are other report-type measures that I used when taking a larger or historical perspective. A Cumulative Flow Diagram (CFD) has been used in a few different ways for different audiences. I use the visual improvement over time to give the team a morale boost (or to demonstrate to new members that it is a gradual/incremental process). I also use it with the current team to provide quantitative perspective on a current conundrum (how does the impact compare historically). I find it immensely helpful when presenting our progress to other teams or managers as it provides WIP, Cycle Time, historic issues, and quantitative improvement results all from one view. One other practice worth mentioning is stringing boards together to gather Value Stream Map details for process efficiency calculations and broader process improvement initiatives. This helps keep the entire system in better perspective rather than keeping our focus too narrow (not to mention giving those that get bored easily an ever increasing scope for improvement opportunities).
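The data behind a CFD is simply a daily count of cards per column, which can be built from the same kind of enter/leave history. This is a sketch under assumptions: the `(card_id, column, entered, left)` row format, the `cfd_rows` helper, and the sample rows are hypothetical, and plotting is left out.

```python
from collections import Counter
from datetime import date, timedelta


def cfd_rows(history, start, end):
    """Return one (day, Counter of column -> card count) row per day."""
    rows = []
    day = start
    while day <= end:
        counts = Counter()
        for _card, column, entered, left in history:
            # A card counts for a column from the day it enters
            # up to, but not including, the day it leaves.
            if entered <= day and (left is None or day < left):
                counts[column] += 1
        rows.append((day, counts))
        day += timedelta(days=1)
    return rows


# Made-up history: card A moves from Dev to Done, card B stays in Dev.
history = [
    ("A", "Dev", date(2009, 3, 2), date(2009, 3, 4)),
    ("A", "Done", date(2009, 3, 4), None),
    ("B", "Dev", date(2009, 3, 3), None),
]

rows = cfd_rows(history, date(2009, 3, 2), date(2009, 3, 4))
for day, counts in rows:
    print(day, dict(counts))
```

Stacking these counts per day gives the familiar bands, where the height of the in-progress bands is WIP and the horizontal distance between arrival and completion lines approximates Cycle Time.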

Results

Our most satisfying results center around the improvement in feature cycle time. We were able to validate the notion that focusing the team on flow speed and much less work (inventory) does actually reward the team and customers with key benefits. Customers receive the benefits of their prioritized opportunities much faster (40 days compared to 90-100 days). More closely matching input (opportunity creation) with our team throughput meant much less effort spent preparing and scrubbing a large backlog, and more of our committed work items had Return On Investment (ROI) measured in months rather than a year or more (only the best feature candidates were selected). Quality also improved as the details, design, and solution were all fresh in the minds of a good cross-section of team members. Having previously had acceptance criteria and TDD in common use, we saw the number of defects reduce only slightly. However, two other large quality differences were the response speed of the feedback loops for defects, and the level of understanding with developers (though difficult to quantify as we do not track simple explanation-seeking interactions).

In response to our own impetus for change, absorbing Lean principles and many of its methods addressed many of our problematic items (though not all).

- We have made a good number of improvements to our process, led by quantitative numbers on where the features were spending most of their time in our process. For example, column(s) that have the highest average time in column became easy process improvement targets. We shaved 6 days off our average by elevating the BA testing work time and the UAT deployment queue.

- As we are pulling small releases through and deploying only when we are ready, there’s very little smell around a time-box ending and moving in-progress code in or out of branches. Each product can release independently and whenever the release is ready.

- It is still not easy to compare our teams to others. However, overall cycle time for a feature, used in combination with production defect rate, helps to assess a team's maturity and capability. Being able to predict and respond to environment or resource changes using quantitative data gives us the feeling that we understand our system better. Our customers have also noticed the consistently fast turnaround for all features specified at a detailed level. Moving a feature through with this speed previously took a collection of people raising a feature’s priority quickly to the top with emergency-like awareness by the delivery team.

- Being able to show management the capacity constrained resources and buffer queues in an understandable visual format gives everyone better insight into resourcing, and also gives our team the opportunity to adjust application of resources and respond quickly to bottleneck changes, or more commonly, unpredictable blips in flow areas.

- While we think the potential for scaling Lean is there, we only used it to aid in vastly reducing backlog levels and in less effort spent scrubbing it for the portfolio. We were not mature or influential enough to move our insights into the business in this time-frame.

Tragically, a re-organization that started in Q4, 2009 and bled into Q1, 2010 froze our program and progress. Our team was dispersed during the reorganization to the point that scant few of us are working together at the time of writing.

Considering the Future

I could not leave this report without mentioning our common anticipation of being able to drop our cycle time further (probably a lot further). The team was convinced that if we had been able to continue through 2010, we could have dropped our average feature cycle time to 20 days. One major difference between our improvement goals prior to 2008 and our sub-20-day feeling with Lean was that we were not concerning ourselves with 'the how', nor were we hesitant because of unforeseen impact events. We had firm trust that the capabilities of the whole team, combined with the Lean methods of visualization cues, encouragement of improvement events, reduced WIP and delivery focus, would put another 100-200% improvement in time-to-benefit within reach.